Atomic Transactions

Atomic Transactions

The mutual exclusion of critical sections ensures that the critical sections are executed atomically. That is, if two critical sections are executed concurrently, the result is equivalent to their sequential execution in some unknown order. Although this property is useful in many application domains, in many cases we would like to make sure that a critical section forms a single logical unit of work that either is performed in its entirety or is not performed at all.

An example is funds transfer, in which one account is debited and another is credited. Clearly, it is essential for data consistency either that both the credit and debit occur or that neither occur. Consistency of data, along with storage and retrieval of data, is a concern often associated with database systems. Recently, there has been an upsurge of interest in using database-systems techniques in operating systems.

Operating systems can be viewed as manipulators of data; as such, they can benefit from the advanced techniques and models available from database research. For instance, many of the ad hoc techniques used in operating systems to manage files could be more flexible and powerful if more formal database methods were used in their place. In Sections 6.9.2 to 6.9.4, we describe some of these database techniques and explain how they can be used by operating systems. First, however, we deal with the general issue of transaction atomicity. It is this property that the database techniques are meant to address.

System Model

A collection of instructions (or operations) that performs a single logical function is called a transaction. A major issue in processing transactions is the preservation of atomicity despite the possibility of failures within the computer system. We can think of a transaction as a program unit that accesses and perhaps updates various data items that reside on a disk within some files. From our point of view, such a transaction is simply a sequence of read and write operations terminated by either a commit operation or an abort operation.

A commit operation signifies that the transaction has terminated its execution successfully, whereas an abort operation signifies that the transaction has ended its normal execution due to some logical error or a system failure. If a terminated transaction has completed its execution successfully, it is committed; otherwise, it is aborted. Since an aborted transaction may already have modified the data that it has accessed, the state of these data may not be the same as it would have been if the transaction had executed atomically. So that atomicity is ensured an aborted transaction must have no effect on the state of the data that it has already modified. Thus, the state of the data accessed by an aborted transaction must be restored to what it was just before the transaction started executing.

We say that such a transaction has been rolled back. It is part of the responsibility of the system to ensure this property. To determine how the system should ensure atomicity, we need first to identify the properties of devices used for storing the various data accessed by the transactions. Various types of storage media are distinguished by their relative speed, capacity, and resilience to failure.

• Volatile storage. Information residing in volatile storage does not usually survive system crashes. Examples of such storage are main and cache memory. Access to volatile storage is extremely fast, both because of the speed of the memory access itself and because it is possible to access directly any data item in volatile storage.

• Nonvolatile storage. Information residing in nonvolatile storage usually survives system crashes. Examples of media for such storage are disks and magnetic tapes. Disks are more reliable than main memory but less reliable than magnetic tapes. Both disks and tapes, however, are subject to failure, which may result in loss of information. Currently, nonvolatile storage is slower than volatile storage by several orders of magnitude, because disk and tape devices are electromechanical and require physical motion to access data.

• Stable storage. Information residing in stable storage is never lost (never should be taken with a grain of salt, since theoretically such absolutes cannot be guaranteed). To implement an approximation of such storage, we need to replicate information in several nonvolatile storage caches (usually disk) with independent failure modes and to update the information in a controlled manner (Section 12.8). Here, we are concerned only with ensuring transaction atomicity in an environment where failures result in the loss of information on volatile storage.

Log-Based Recovery

One way to ensure atomicity is to record, on stable storage, information describing all the modifications made by the transaction to the various data it accesses. The most widely used method for achieving this form of recording is write-ahead logging. Here, the system maintains, on stable storage, a data structure called the log. Each log record describes a single operation of a transaction write and has the following fields:

• Transaction name. The unique name of the transaction that performed the write operation

• Data item name. The unique name of the data item written

• Old value. The value of the data item prior to the write operation

• New value.

The value that the data item will have after the write Other special log records exist to record significant events during transaction processing, such as the start of a transaction and the commit or abort of a transaction. Before a transaction T, starts its execution, the record < T, starts> is written to the log. During its execution, any write operation by T, is preceded by the writing of the appropriate new record to the log. When 7/ commits, the record < T, commits> is written to the log. Because the information in the log is used in reconstructing the state of the data items accessed by the various transactions, we cannot allow the actual update to a data item to take place before the corresponding log record is written out to stable storage. We therefore require that, prior to execution of a write(X) operation, the log records corresponding to X be written onto stable storage. Note the performance penalty inherent in this system. Two physical writes are required for every logical write requested. Also, more storage is needed, both for the data themselves and for the log recording the changes.

In cases where the data are extremely important and fast failure recovery is necessary, the price is worth the functionality. Using the log, the system can handle any failure that does not result in the loss of information on nonvolatile storage. The recovery algorithm uses two procedures:

• undo(TJ), which restores the value of all data updated by transaction T, to the old values

• redo(Tj), which sets the value of all data updated by transaction T; to the new values The set of data updated by 7} and their respective old and new values can be found in the log. The undo and redo operations must be idempotent (that is, multiple executions must have the same result as does one execution) to guarantee correct behavior, even if a failure occurs during the recovery process. If a transaction 7} aborts, then we can restore the state of the data that it has updated by simply executing undo(7}).

If a system failure occurs, we restore the state of all updated data by consulting the log to determine which transactions need to be redone and which need to be undone. This classification of transactions is accomplished as follows:

• Transaction 7, needs to be undone if the log contains the < T, starts> record but does not contain the < T-, commits> record.

• Transaction T, needs to be redone if the log contains both the < T, starts> and the < 7/ commits> records.

Checkpoints

When a system failure occurs, we must consult the log to determine those transactions that need to be redone and those that need to be undone. In principle, we need to search the entire log to make these determinations. There are two major drawbacks to this approach: 6.9 Atomic Transactions 225 1. The searching process is time consuming. » 2. Most of the transactions that, according to our algorithm, need to be redone have already actually updated the data that the log says they need to modify. Although redoing the data modifications will cause no harm (due to idempotency), it will nevertheless cause recovery to take longer.

To reduce these types of overhead, we introduce the concept of checkpoints. During execution, the system maintains the write-ahead log. In addition, the system periodically performs checkpoints that require the following sequence of actions to take place:

1. Output all log records currently residing in volatile storage (usually main memory) onto stable storage.

2. Output all modified data residing in volatile storage to the stable storage.

3. Output a log record onto stable storage. The presence of a record in the log allows the system to streamline its recovery procedure. Consider a transaction Tj that committed prior to the checkpoint.

The < T, commit s> record appears in the log before the record, and then finding the subsequent < Ti start > record. Once transaction Tj has been identified, the redo and undo operations need be applied only to transaction Tj and all transactions Tj that started executing after transaction Tj-. We'll call these transactions set T. The remainder of the log can thus be ignored.

The recovery operations that are required are as follows: a For all transactions Tjt in T such that the record < Tj;- commits> appears in the log, execute redo(T)t). • For all transactions Tj- in T that have no < Ti- commits> record in the log, execute undo(TO- 6.9.4 Concurrent Atomic Transactions We have been considering an environment in which only one transaction can be executing at a time. We now turn to the case where multiple transactions are active simultaneously. Because each transaction is atomic, the concurrent execution of transactions must be equivalent to the case where these transactions are executed serially in some arbitrary order. This property, called serializability, can be maintained by simply executing each transaction within 226 Chapter 6 Process Synchronization a critical section. That is, all transactions share a common semaphore mutex, which is initialized to 1.

When a transaction starts executing, its first action is to execute wa.±t(mutex). After the transaction either commits or aborts, it executes signal(/?z«ta')- Although this scheme ensures the atomicity of all concurrently executing transactions, it is nevertheless too restrictive. As we shall see, in many cases we can allow transactions to overlap their execution while maintaining serializability. A number of different concurrency-control algorithms ensure serializability. These algorithms are described below.

Serializability

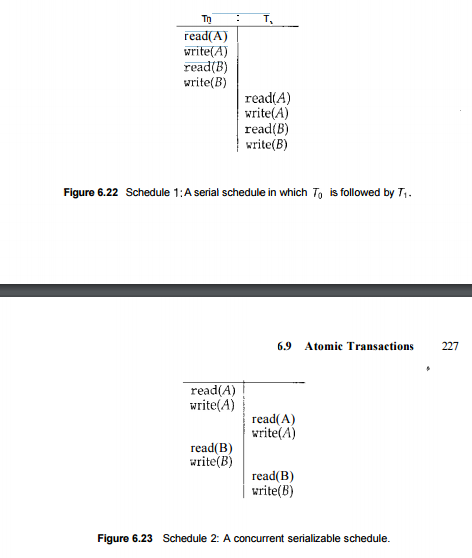

Consider a system with two data items, A and B, that are both read and written by two transactions, To and T\. Suppose that these transactions are executed atomically in the order To followed by T\. This execution sequence, which is called a schedule, is represented in Figure 6.22. In schedule 1 of Figure 6.22, the sequence of instruction steps is in chronological order from top to bottom, with instructions of To appearing in the left column and instructions of T\ appearing in the right column. A schedule in which each transaction is executed atomically is called a serial schedule. A serial schedule consists of a sequence of instructions from various transactions wherein the instructions belonging to a particular transaction appear together.

Thus, for a set of n transactions, there exist n\ different valid serial schedules. Each serial schedule is correct, because it is equivalent to the atomic execution of the various participating transactions in some arbitrary order. If we allow the two transactions to overlap their execution, then the resulting schedule is no longer serial. A nonserial schedule does not necessarily imply an incorrect execution (that is, an execution that is not equivalent to one represented by a serial schedule).

To see that this is the case, we need to define the notion of conflicting operations. Consider a schedule S in which there are two consecutive operations O,- and Oj of transactions T, and Tj, respectively. We say that O, and Oj conflict if they access the same data item and at least one of them is a write operation. To illustrate the concept of conflicting operations, we consider the nonserial schedule 2 of Figure 6.23. The write(A) operation of To conflicts with the read(A) operation of Ti.

However, the write(A) operation of T\ does not conflict with the read(B) operation of To, because the two operations access different data items. Let Oj and Oj be consecutive operations of a schedule S. If O, and O; are operations of different transactions and O-, and Oj do not conflict, then we can swap the order of O, and 0/ to produce a new schedule S'. We expect S to be equivalent to S', as all operations appear in the same order in both schedules, except for O, and Oj, whose order does not matter. We can illustrate the swapping idea by considering again schedule 2 of Figure 6.23.

As the write(A) operation of T\ does not conflict with the read(B) operation of To, we can swap these operations to generate an equivalent schedule. Regardless of the initial system state, both schedules produce the same final system state. Continuing with this procedure of swapping nonconflicting operations, we get:

• Swap the read(B) operation of TQ with the read(A) operation of T\.

• Swap the write(B) operation of To with the write(A) operation of T\.

• Swap the write(B) operation of To with the read(A) operation of T\. The final result of these swaps is schedule 1 in Figure 6.22, which is a serial schedule. Thus, we have shown that schedule 2 is equivalent to a serial schedule. This result implies that, regardless of the initial system state, schedule 2 will produce the same final state as will some serial schedule. If a schedule S can be transformed into a serial schedule S' by a series of swaps of nonconflicting operations, we say that a schedule S is conflict serializable. Thus, schedule 2 is conflict serializable, because it can be transformed into the serial schedule 1

Locking Protocol

One way to ensure serializability is to associate with each data item a lock and to require that each transaction follow a locking protocol that governs how locks are acquired and released. There are various modes in which a data item can be locked. In this section, we restrict our attention to two modes: 228 Chapter 6 Process Synchronization

• Shared. If a transaction X, has obtained a shared-mode lock (denoted by S) on data item Q, then 7] can read this item but cannot write Q

• Exclusive. If a transaction T, has obtained an exclusive-mode lock (denoted by X) on data item Q, then 7} can both read and write Q. We require that every transaction request a lock in an appropriate mode on data item Q, depending on the type of operations it will perform on Q. To access data item Q, transaction 7} must first lock Q in the appropriate mode. If Q is not currently locked, then the lock is granted, and T; can now access it. However, if the data item Q is currently locked by some other transaction, then 7) may have to wait. More specifically, suppose that 7} requests an exclusive lock on Q. In this case, 7] must wait until the lock on Q is released. If T, requests a shared lock on Q, then 7) must wait if Q is locked in exclusive mode.

Otherwise, it can obtain the lock and access Q. Notice that this scheme is quite similar to the readers-writers algorithm discussed in Section 6.6.2. A transaction may unlock a data item that it locked at an earlier point. It must, however, hold a lock on a data item as long as it accesses that item. Moreover, it is not always desirable for a transaction to unlock a data item immediately after its last access of that data item, because serializability may not be ensured.

One protocol that ensures serializability is the two-phase locking protocol. This protocol requires that each transaction issue lock and unlock requests in two phases:

• Growing phase. A transaction may obtain locks but may not release any lock.

• Shrinking phase. A transaction may release locks but may not obtain any new locks. Initially, a transaction is in the growing phase. The transaction acquires locks as needed.

Once the transaction releases a lock, it enters the shrinking phase, and no more lock requests can be issued. The two-phase locking protocol ensures conflict serializability (Exercise 6.25). It does not, however, ensure freedom from deadlock. In addition, it is possible that, for a given set of transactions, there are conflict-serializable schedules that cannot be obtained by use of the two-phase locking protocol. However, to improve performance over two-phase locking, we need either to have additional information about the transactions or to impose some structure or ordering on the set of data.

Timestamp-Based Protocols

In the locking protocols described above, the order followed by pairs of conflicting transactions is determined at execution time by the first lock that both request and that involves incompatible modes. Another method for determining the serializability order is to select an order in advance. The most common method for doing so is to use a timestamp ordering scheme. With each transaction T, in the system, we associate a unique fixed timestamp, denoted by TS(T/). This timestamp is assigned by the system 6.9 Atomic Transactions 229 before the transaction T, starts execution. If a transaction 7} has been assigned timestamp TS(Tj-), and later a new transaction 7) enters the system, then TS(7}) < TS(Tj). There are two simple methods for implementing this scheme:

• Use the value of the system clock as the timestamp; that is, a transaction's timestamp is equal to the value of the clock when the transaction enters the system. This method will not work for transactions that occur on separate systems or for processors that do not share a clock.

• Use a logical counter as the timestamp; that is, a transaction's timestamp is equal to the value of the counter when the transaction enters the system. The counter is incremented after a new timestamp is assigned. The timestamps of the transactions determine the serializability order. Thus, if TS(T,) < TS(T,), then the system must ensure that the produced schedule is equivalent to a serial schedule in which transaction T, appears before transaction T,. To implement this scheme, we associate with each data item Q two timestamp values:

• W-timestamp(Q) denotes the largest timestamp of any transaction that successfully executed write(Q).

• R-timestamp(Q) denotes the largest timestamp of any transaction that successfully executed read(Q). These timestamps are updated whenever a new read(Q) or write(Q) instruction is executed. The timestamp-ordering protocol ensures that any conflicting read and write operations are executed in timestamp order. This protocol operates as follows:

• Suppose that transaction T,- issues read(Q): o If TS(T,) < W-timestamp(), then T, needs to read a value of Q that was already overwritten. Hence, the read operation is rejected, and Tj- is rolled back. o If TS(TJ) > W-timestamp(Q), then the read operation is executed, and R-timestamp(Q) is set to the maximum of R-timestamp(Q) and TS(T,).

• Suppose that transaction 7} issues write(Q): o If TS(T,) < R-timestamp(Q), then the value of Q that 7} is producing was needed previously and T,- assumed that this value would never be produced. Hence, the write operation is rejected, and 7} is rolled back. => If TS(T,) < W-timestamp(Q), then T, is attempting to write an obsolete value of Q. Hence, this write operation is rejected, and T, is rolled back. o Otherwise, the write operation is executed. A transaction T, that is rolled back as a result of the issuing of either a read or write operation is assigned a new timestamp and is restarted.

To illustrate this protocol, consider schedule 3 of Figure 6.24, which includes transactions % and T3. We assume that a transaction is assigned a timestamp immediately before its first instruction. Thus, in schedule 3, TS(T2) < TS(T3), and the schedule is possible under the timestamp protocol. This execution can also be produced by the two-phase locking protocol. However, some schedules are possible under the two-phase locking protocol but not under the timestamp protocol, and vice versa. The timestamp protocol ensures conflict serializability. This capability follows from the fact that conflicting operations are processed in timestamp order. The protocol also ensures freedom from deadlock, because no transaction ever waits.

Frequently Asked Questions

Recommended Posts:

- Operating System Concepts ( Multi tasking, multi programming, multi-user, Multi-threading )

- Different Types of Operating Systems

- Batch Operating Systems

- Time sharing operating systems

- Distributed Operating Systems

- Network Operating System

- Real Time operating System

- Various Operating system services

- Architectures of Operating System

- Monolithic architecture - operating system

- Layered Architecture of Operating System

- Microkernel Architecture of operating system

- Hybrid Architecture of Operating System

- System Programs and Calls

- Process Management - Process concept